The Total Least Squares (TLS) problem is a well-known technique for solving over-determined linear systems of equations

$$Ax \approx b, \qquad A \in \mathbb{R}^{m \times n},\ b \in \mathbb{R}^{m},\ m > n,$$

in which both the matrix $A$ and the right-hand side $b$ are affected by errors. We consider the following classical definition of the TLS problem. The Total Least Squares problem with data $A$ and $b$ is given by

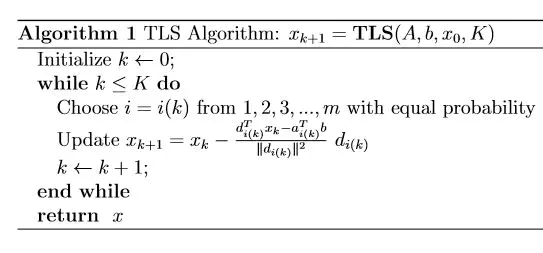

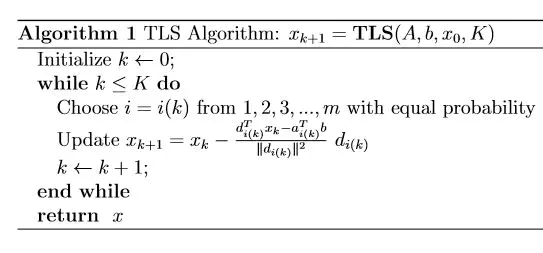

where $E \in \mathbb{R}^{m \times n}$ and $r \in \mathbb{R}^{m}$. Here, $\|\cdot\|_F$ denotes the Frobenius matrix norm, and $[E \mid r]$ denotes the $m \times (n+1)$ matrix whose first $n$ columns are the columns of $E$ and whose last column is $r$.

Many engineering and statistics applications reduce a mathematical model to an over-determined, possibly inconsistent linear system $Ax \approx b$. In these applications, solving the system in the TLS sense yields a more convenient approach than ordinary least squares (OLS). OLS assumes the data matrix $A$ is exact and attributes all errors to the right-hand side $b$. In this project, we derived an iterative algorithm (see Algorithm 1 above) for solving the Total Least Squares problem based on randomized projection.
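The classical TLS problem has a closed-form solution through the singular value decomposition (SVD) of the augmented matrix $[A \mid b]$. This is the standard textbook characterization, not the randomized-projection method of Algorithm 1. Let $v$ be the right singular vector for the smallest singular value $\sigma_{n+1}$. In the generic case, where the last entry of $v$ is nonzero, the solution is $x = -v_{1:n}/v_{n+1}$, and the minimal correction is the rank-one matrix $[E \mid r] = -\sigma_{n+1} u v^{\top}$, whose Frobenius norm equals $\sigma_{n+1}$. The sketch below assumes NumPy. The helper names `tls_svd` and `ols` are hypothetical, and the synthetic data is made up for illustration. A direct solver like this could serve as a baseline when checking an iterative TLS method.

```python
import numpy as np


def tls_svd(A: np.ndarray, b: np.ndarray):
    """Reference TLS solution via the SVD of the augmented matrix [A | b].

    Returns x together with the minimal corrections E, r such that
    (A + E) x = b + r and ||[E | r]||_F equals the smallest singular value.
    """
    m, n = A.shape
    C = np.column_stack([A, b])                  # m x (n+1) augmented data matrix
    U, s, Vt = np.linalg.svd(C, full_matrices=False)
    u, sigma, v = U[:, -1], s[-1], Vt[-1]        # smallest singular triplet
    if abs(v[n]) < 1e-12:
        raise ValueError("no TLS solution: last entry of the singular vector is zero")
    x = -v[:n] / v[n]
    correction = -sigma * np.outer(u, v)         # rank-one correction [E | r]
    E, r = correction[:, :n], correction[:, n]
    return x, E, r


def ols(A: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Ordinary least squares: only b is treated as noisy."""
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    m, n = 200, 3
    x_true = np.array([1.0, -2.0, 0.5])
    A_clean = rng.standard_normal((m, n))
    # Synthetic errors-in-variables data: noise in both A and b.
    A = A_clean + 0.05 * rng.standard_normal((m, n))
    b = A_clean @ x_true + 0.05 * rng.standard_normal(m)

    x_tls, E, r = tls_svd(A, b)
    print("TLS:", x_tls)
    print("OLS:", ols(A, b))
    print("constraint residual:", np.linalg.norm((A + E) @ x_tls - (b + r)))
```

The script prints the TLS and OLS estimates side by side. For consistent, noise-free data the two coincide. When $A$ itself is noisy, they differ, because only TLS allows corrections to the matrix.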

Photo by rawpixel on Unsplash
